By: Kevin Gonzalez, VP of Security, Operations, and Data at Anvilogic

Ah, triage. Everyone's doing it with their shiny new copilots—essentially evolved chatbots with some MCP server or similar contraption chugging along with deep enrichment capabilities. But here's the reality check: these fancy assistants are good for helping with investigations, but they can't possibly be engineered to support every single customer with their own respective detection frameworks, schemas, and enrichment factors.

So let's cut through the noise and discuss how to build a triage agent whose sole purpose is eliminating the majority of the investigation process and getting you as close to response actions as possible. After all, triage is just you putting on your detective hat and doing some good old FBI profiling to determine whether an alert warrants a response.

Getting Your House in Order: The Foundation Problem

At this point, I can assure you that almost any reading material on automating your security ops processes with LLMs tells you to start with targeted, easy-to-automate areas, which tend to be the most standardized. The industry is moving away from workloads that mirror traditional SOAR playbooks or scripted workflows. Newer tools try to adapt in real time, triaging and investigating alerts without you having to map out every move. But here's what the industry isn't telling you: a strong detection engineering foundation is what paves the way for you to automate the whole thing.

Good data in, good data out—that axiom still holds true after all these years. If you've been following the evolution of agentic detection engineering, you know that modern detection creation can compress the scientific method from weeks to minutes while producing standardized, production-ready outputs. Let's assume you've achieved that level of detection engineering maturity—whether through Anvilogic or other security products that give you a strong baseline foundation. Where does that lead you?

Well, the next step is revolutionary: once you have structured and standardized outputs from your detection engineering process, you can do wonders with Agentic AI. This is where the compound effect of proper detection engineering practices really pays off.

Since every alert is standardized, we can apply an entire DDL (Data Definition Language) knowledge base—essentially a structured schema that defines how all our security data is organized and categorized. We can then process the components within that DDL with their respective domains (processes, IPs, entities, etc.), and explain each individually and effectively, allowing us to generate a set of facts for each and every alert.

The beauty of modern agentic detection engineering is that it doesn't just create individual rules—it builds detection outputs that are already enriched with MITRE ATT&CK mappings, threat group associations, and contextual metadata. This structured foundation becomes the input fuel for our triage automation.

Facts vs. Determinations: The Paradigm Shift

So what does this all mean? Of course, a process equals a process, and that's a fact. But for every single alert, we can apply traditional data science and statistical methods first, before handing anything to newer AI methodologies, which can be prone to error.

According to the Osterman Research Report, almost 90% of SOCs are overwhelmed by backlogs and false positives, with more than 80% of analysts reporting feeling constantly behind. A recent Trend Micro survey found that 51% of SOC teams feel overwhelmed by alert volume, with analysts spending over 25% of their time handling false positives. The SANS 2024 SOC Survey revealed that 66% of SOC teams reported they can't keep pace with the volume of alerts they receive, while enterprise environments regularly see 10,000+ alerts daily.

Things like simple prevalence counts (how many times have we seen this process?) and relationships (this process with these process flags is uncommon with this user/host) become new enrichment factors and NOT escalation factors as they were traditionally. The key distinction here is treating everything as a fact rather than a determination.

Additional enrichment includes:

- Malicious derivatives: Domain-level processing for anomalous process names in process paths

- IOC lookups: Real-time threat intelligence integration

- SLMs for process explanations: Small language models providing contextual understanding

- Statistical methods: Ordinal regressions, Learning-to-Rank (LTR), data mining techniques

- Confidence scoring: Leveraging detection engineer-generated confidence and severity levels
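To make the "fact, not determination" idea concrete, here is a minimal sketch of prevalence counting. The event fields (`user`, `process_name`) and the function name `prevalence_facts` are hypothetical and chosen only for illustration; a real pipeline would read from your own alert schema. Note that the function only describes what it saw and never labels the alert good or bad.

```python
from collections import Counter

def prevalence_facts(history, alert):
    """Return prevalence facts for an alert. These describe; they do not decide."""
    process_counts = Counter(e["process_name"] for e in history)
    pair_counts = Counter((e["user"], e["process_name"]) for e in history)
    total = len(history)
    seen = process_counts[alert["process_name"]]
    return {
        # How many times have we seen this process at all?
        "process_seen_count": seen,
        # Share of historical events that involve this process.
        "process_prevalence": seen / total if total else 0.0,
        # How many times has this user run this process?
        "user_process_seen_count": pair_counts[(alert["user"], alert["process_name"])],
    }

# Hypothetical history and alert, for illustration only.
history = [
    {"user": "alice", "process_name": "ssh.exe"},
    {"user": "alice", "process_name": "powershell.exe"},
    {"user": "bob", "process_name": "powershell.exe"},
    {"user": "bob", "process_name": "powershell.exe"},
]
alert = {"user": "bob", "process_name": "ssh.exe"}
facts = prevalence_facts(history, alert)
print(facts)
```

The output is attached to the alert as enrichment. Whether a low count matters is left to the later stages.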

The Two-Phase DDL Approach

So now, with every detection, we've generated a fully enriched alert, from both a security perspective and a data science perspective. Next, we apply a secondary DDL for post-processing so the system can actually recognize and understand all the new enrichment facts we just embedded through traditional processing.

This is when LLMs do their fancy work—but here's the crucial difference from those other copilot approaches everyone's peddling.

Unlike traditional SOAR or Hyperautomation tools, agentic AI doesn't need playbooks or scripted workflows. It adapts in real time, triaging and investigating alerts without requiring manual mapping of every move. The distinction is critical: we're not asking the LLM to figure out what to investigate from scratch. We're giving it all the facts from our fast, mass-data processing and asking it to make intelligent decisions about which deeper investigations to perform.

Based on those initial facts, the LLM might decide to:

- Trigger additional research for contextual understanding

- Leverage costly enrichment integrations to collect supplementary information only when the initial facts warrant the expense

- Initiate behavioral analysis on related entities or timeframes

- Pull payloads for sandbox detonation when indicators suggest potential malware

- Correlate across multiple data sources for attack chain reconstruction

- Query threat intelligence feeds for supporting telemetry and threat information

All of this deep investigation is orchestrated intelligently—the LLM uses the preprocessed facts to determine which expensive or time-consuming operations are worth performing, rather than running everything through a static playbook. This dynamic investigation process ultimately leads to the final determination, but it's the intelligent decision-making about what to investigate deeper that separates true agentic systems from basic automation.

Treating LLMs Like Machines, Not Buddies

Whether you know it or not, LLMs are still machines. And although they understand natural language, treating them as machines is much more effective than treating them like your senior cubicle buddy you're asking for advice.

We don't want to pre-prompt our LLMs with unstructured natural language text looking for outcomes. Instead, we should be giving them structured data and clear instructions in machine-readable formats.
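As a rough sketch of what "structured data and clear instructions" could look like in practice, the snippet below packages facts and an instruction into a request. The `role`/`content` message layout is a common chat convention assumed here, not a specific vendor API, and `build_messages` plus the instruction wording are hypothetical.

```python
import json

def build_messages(facts, task):
    """Package facts and the task as machine-readable JSON rather than free-form prose."""
    system = (
        "You are a triage component. Input is a JSON object with 'facts' and 'task'. "
        "Respond with a single JSON object only."
    )
    payload = {"facts": facts, "task": task}
    return [
        {"role": "system", "content": system},
        # sort_keys keeps the serialized payload stable across runs.
        {"role": "user", "content": json.dumps(payload, sort_keys=True)},
    ]

# Hypothetical facts, for illustration only.
messages = build_messages(
    {"process_name": "ssh.exe", "prevalence_score": 0.02},
    "determine_additional_investigations",
)
print(messages[1]["content"])
```

Keeping the payload as JSON means the same facts can be logged, diffed, and replayed, which is harder to do with a conversational prompt.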

Having all these previously generated "facts" from our detection engineering lifecycle and data science processing allows for exactly that. The agent doesn't need to research what SSH with the -F flag means—that knowledge is already embedded in the detection metadata from the agentic creation process. But the prevalence analysis, user behavior baselines, and statistical correlations come from the data science processing pipeline that sits between detection creation and triage automation.

The traditional approach asks the LLM: "Hey, can you help me figure out if this alert is bad?"

The agentic approach provides:

    {
      "alert_metadata": {
        "process_name": "ssh.exe",
        "prevalence_score": 0.02,
        "user_context": "engineering_team",
        "host_criticality": "high"
      },
      "enrichment_facts": {
        "domain_reputation": "clean",
        "process_ancestry": "legitimate_parent",
        "network_indicators": "external_connection",
        "temporal_analysis": "outside_business_hours"
      },
      "statistical_context": {
        "user_baseline_deviation": 2.3,
        "host_behavior_anomaly": 0.8,
        "threat_intel_matches": 0
      }
    }

Enrichment Facts Generated From Alert

Then asks: "Based on these facts, determine what additional investigations are warranted and execute them dynamically."

The LLM might respond with something like:

    {
      "investigation_plan": {
        "cost_justified_enrichments": [
          "query_threat_intel_for_destination_ip",
          "analyze_ssh_config_file_contents"
        ],
        "sandbox_analysis": {
          "required": false,
          "reason": "no_file_artifacts_present"
        },
        "behavioral_correlation": {
          "timeframe": "24_hours",
          "entities": ["user", "destination_host"]
        }
      },
      "reasoning": "High baseline deviation warrants deeper investigation of user behavior patterns and destination analysis"
    }

Agentic Triage Outcome - Warrants Deeper Investigation

This intelligent orchestration of investigative resources based on initial facts is what separates true agentic triage from simple classification.
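One way to act on a plan like this is a simple allowlisted dispatcher, sketched below. The handler functions are hypothetical stand-ins for your own integrations, and the `delete_all_logs` entry is added purely to show what happens when the model names an action that was never registered. This is an illustration under those assumptions, not a description of any product's implementation.

```python
import json

# Hypothetical enrichment handlers; real ones would call your own integrations.
def query_threat_intel_for_destination_ip(alert):
    return {"action": "query_threat_intel_for_destination_ip", "status": "queued"}

def analyze_ssh_config_file_contents(alert):
    return {"action": "analyze_ssh_config_file_contents", "status": "queued"}

# Only actions registered here can ever run, whatever the model asks for.
HANDLERS = {
    "query_threat_intel_for_destination_ip": query_threat_intel_for_destination_ip,
    "analyze_ssh_config_file_contents": analyze_ssh_config_file_contents,
}

def execute_plan(plan_json, alert):
    """Run allowlisted enrichments named in the plan and report anything unknown."""
    plan = json.loads(plan_json)["investigation_plan"]
    results, rejected = [], []
    for name in plan.get("cost_justified_enrichments", []):
        handler = HANDLERS.get(name)
        if handler is None:
            rejected.append(name)
        else:
            results.append(handler(alert))
    if plan.get("sandbox_analysis", {}).get("required"):
        results.append({"action": "sandbox_analysis", "status": "queued"})
    return results, rejected

plan_json = json.dumps({
    "investigation_plan": {
        "cost_justified_enrichments": [
            "query_threat_intel_for_destination_ip",
            "analyze_ssh_config_file_contents",
            "delete_all_logs",  # not registered, so it is rejected
        ],
        "sandbox_analysis": {"required": False, "reason": "no_file_artifacts_present"},
    }
})
results, rejected = execute_plan(plan_json, {"process_name": "ssh.exe"})
print(results, rejected)
```

Treating the plan as data to validate, rather than instructions to trust, keeps the LLM's role limited to choosing among operations you have already approved.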

Expandability: Building the Attack Story Automatically

The next step, given all we can do within this pre- and post-processing alert pipeline, is expandability. Maybe we found an alert that tells a beautiful story about how our external-facing web server just had a serving of RCE and had some sort of persistence implanted on it.

For the story's sake, let's say the persistence was an autorun registry key, and that this was a new RCE we had no detection for, so what we actually detected was the autorun registry key being created. There are at least hundreds of atomic-level detections happening on this host, from sysadmin to application-level activity. What would an Agentic Investigation approach entail?

Modern incident-centric models group alerts that are determined to be related to a single security event into an incident before being shown to an analyst. The analyst queue then becomes a prioritized list of incidents rather than alerts.

We can leverage the post-processing alert pipeline to find alerts with a set of facts whose rank meets the threshold to be interesting—even if alone they were not enough to be escalated individually—and determine their viability in the grander scheme of things.

From here, we expand on the original event and begin building a sequence of detections with a more in-depth explanation of what occurred. Once you know what occurred, you can respond with suggested remediation plans.
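A minimal sketch of that expansion step, assuming each alert carries a `host`, a `time`, and a precomputed `rank` from the post-processing pipeline. The field names, the 0.5 threshold, and the 24-hour window are all illustrative assumptions, and real correlation would likely use more than host and time.

```python
from datetime import datetime, timedelta

def build_incident(seed, alerts, rank_threshold=0.5, window=timedelta(hours=24)):
    """Collect same-host alerts near the seed whose rank clears the threshold, in time order."""
    related = [
        a for a in alerts
        if a["host"] == seed["host"]
        and abs(a["time"] - seed["time"]) <= window
        and a["rank"] >= rank_threshold
    ]
    return sorted(related, key=lambda a: a["time"])

# Hypothetical alerts for the web server story, for illustration only.
t0 = datetime(2025, 1, 1, 12, 0)
alerts = [
    {"id": "autorun_key", "host": "web01", "time": t0, "rank": 0.9},
    {"id": "webshell_child_proc", "host": "web01", "time": t0 - timedelta(minutes=10), "rank": 0.6},
    {"id": "sysadmin_login", "host": "web01", "time": t0 - timedelta(hours=2), "rank": 0.1},
    {"id": "unrelated", "host": "db02", "time": t0, "rank": 0.8},
]
incident = build_incident(alerts[0], alerts)
ids = [a["id"] for a in incident]
print(ids)
```

Here the low-ranked sysadmin login and the alert on another host are left out, while the earlier child-process alert, which would not have been escalated alone, joins the sequence ahead of the autorun key.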

The Technical Architecture: Making It Work

Here's how this looks in practice:

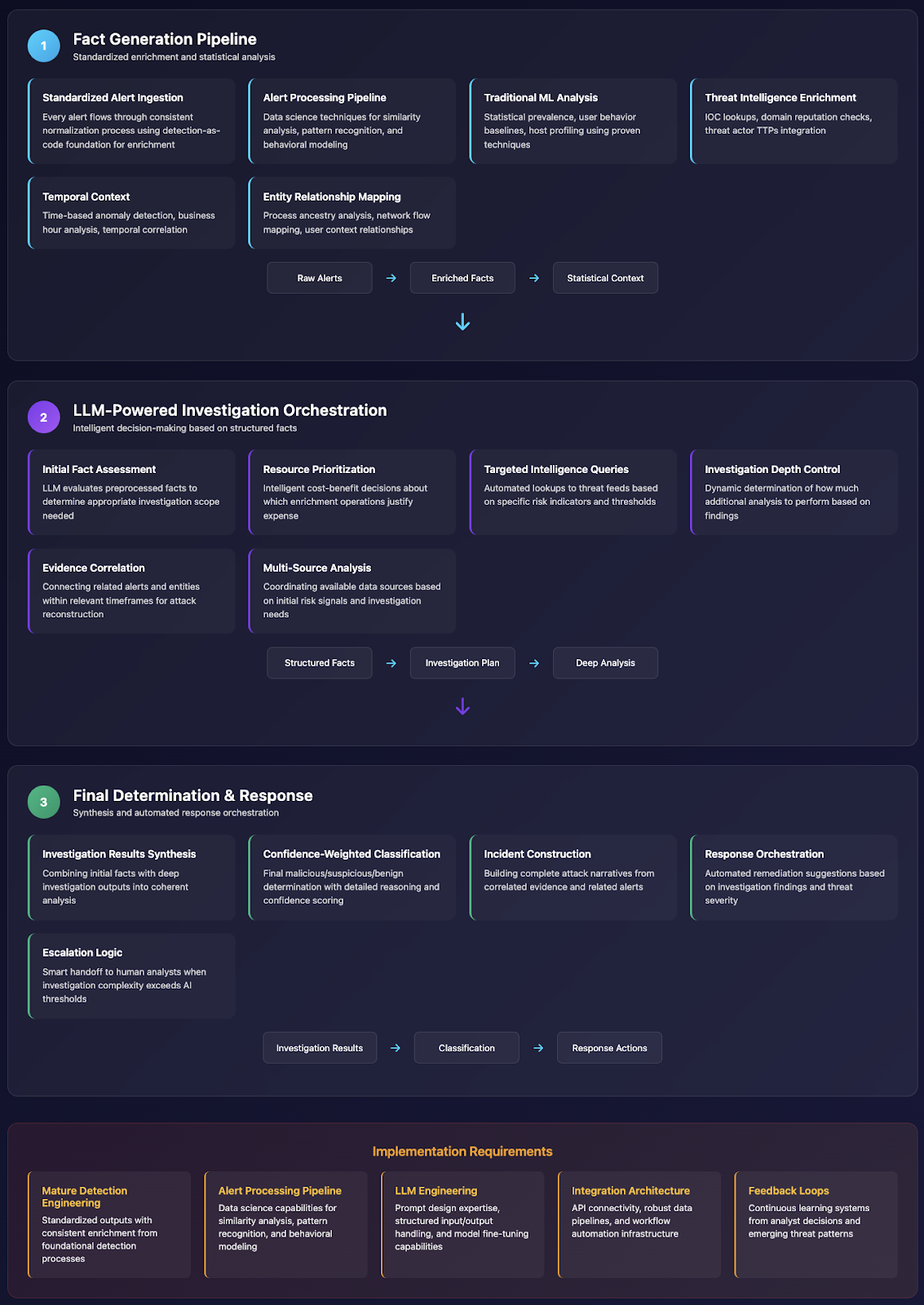

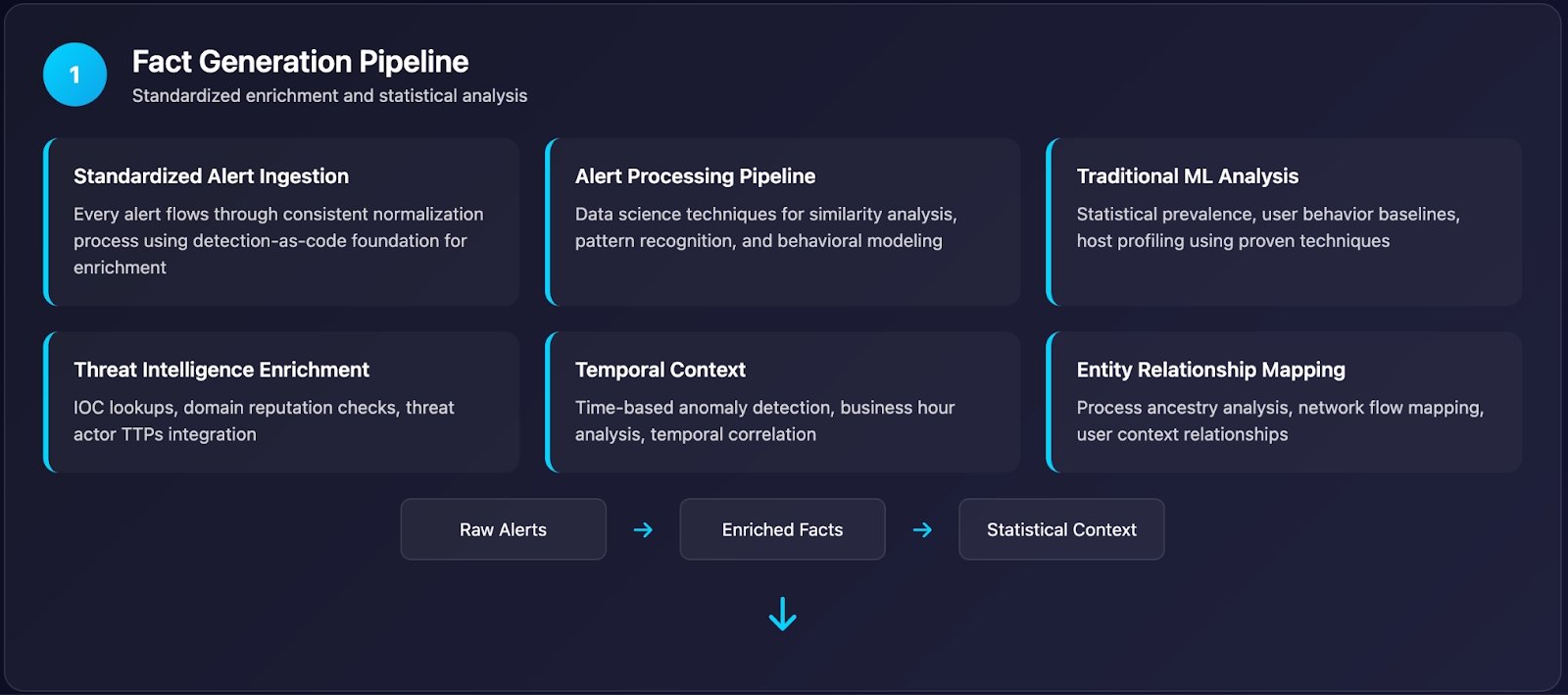

Phase 1: Fact Generation Pipeline

- Standardized Alert Ingestion: Every alert flows through the same normalization process—building on a detection-as-code foundation that ensures consistency and enrichment

- Alert Processing Pipeline: Data science techniques for similarity analysis, pattern recognition, and behavioral modeling

- Traditional ML Analysis: Statistical prevalence, user behavior baselines, host profiling

- Threat Intelligence Enrichment: IOC lookups, domain reputation, threat actor TTPs

- Temporal Context: Time-based anomaly detection, business hour analysis

- Entity Relationship Mapping: Process ancestry, network flow analysis, user context

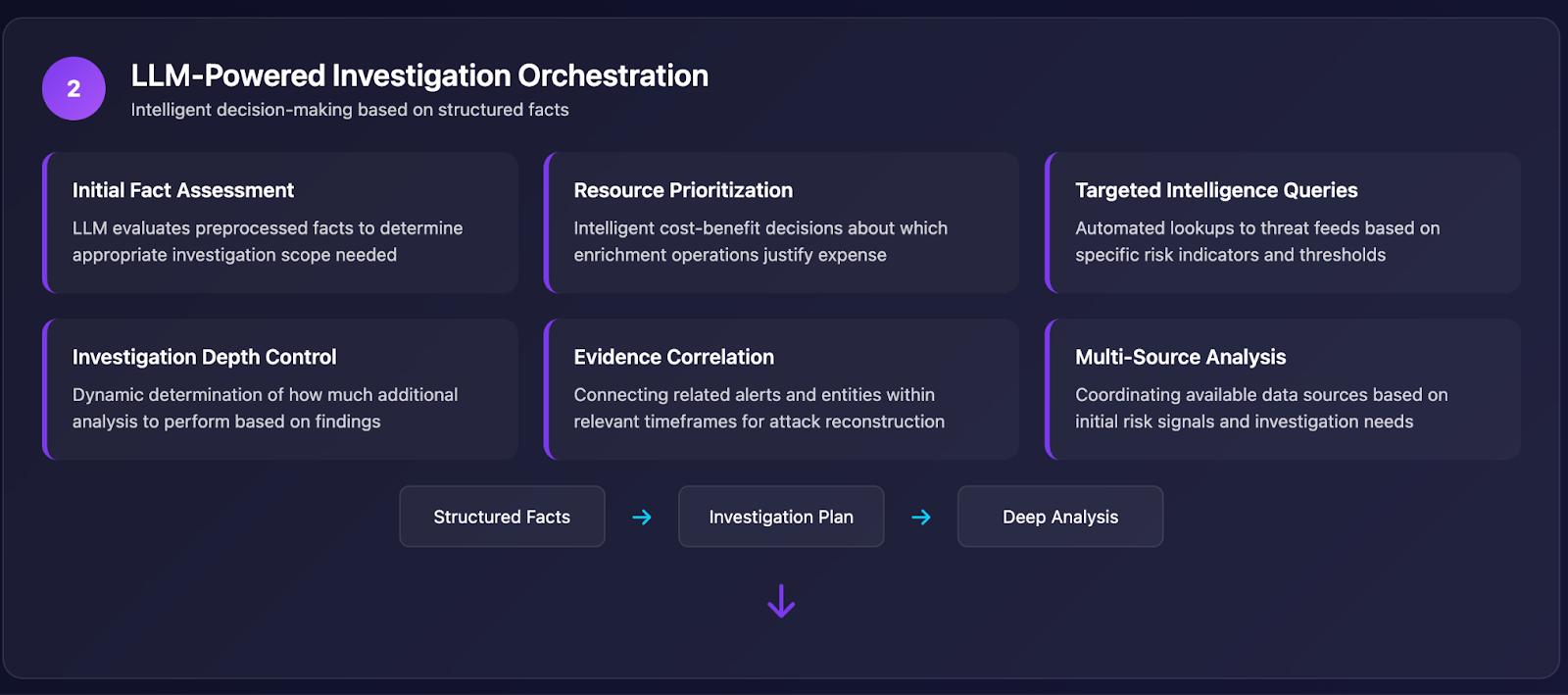

Phase 2: LLM-Powered Investigation Orchestration

- Initial Fact Assessment: LLM evaluates preprocessed facts to determine the investigation scope needed

- Resource Prioritization: Intelligent decisions about which enrichment operations justify the cost

- Targeted Intelligence Queries: Automated lookups to threat feeds based on specific risk indicators

- Investigation Depth Control: Dynamic determination of how much additional analysis to perform

- Evidence Correlation: Connecting related alerts and entities within relevant timeframes

- Multi-Source Analysis: Coordinating available data sources based on initial risk signals

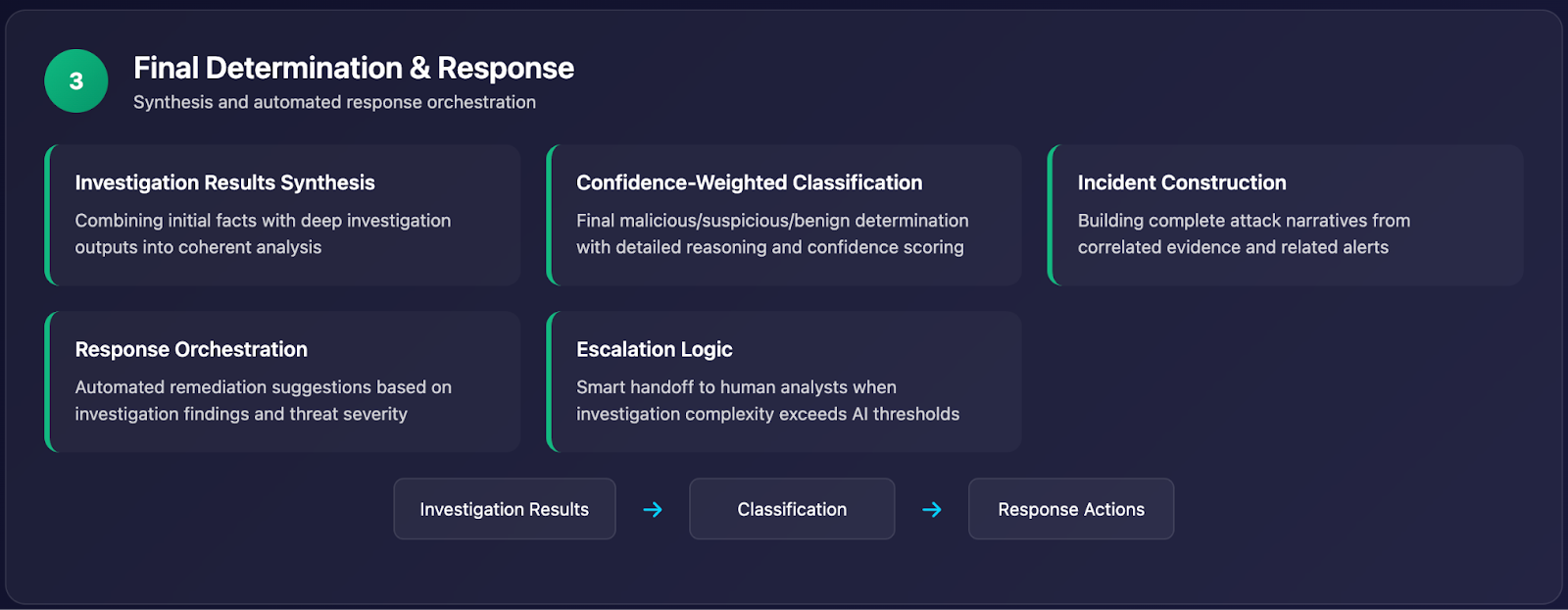

Phase 3: Final Determination & Response

- Investigation Results Synthesis: Combining initial facts with deep investigation outputs

- Confidence-Weighted Classification: Final malicious/suspicious/benign determination with reasoning

- Incident Construction: Building complete attack narratives from correlated evidence

- Response Orchestration: Automated remediation suggestions based on investigation findings

- Escalation Logic: Smart handoff to human analysts when investigation complexity exceeds thresholds
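As a loose illustration of confidence-weighted classification with an escalation rule, the sketch below sums confidence per label and hands off to a human when the result is not benign or the winning share is low. The weighting scheme, the 0.6 threshold, and the function name `final_determination` are assumptions made for this example only, not a prescribed method.

```python
def final_determination(signals, escalate_below=0.6):
    """signals: list of (label, confidence) pairs produced by investigation steps."""
    totals = {"malicious": 0.0, "suspicious": 0.0, "benign": 0.0}
    for label, confidence in signals:
        totals[label] += confidence
    total_weight = sum(totals.values())
    label = max(totals, key=totals.get)
    # Share of all confidence weight that supports the winning label.
    share = totals[label] / total_weight if total_weight else 0.0
    return {
        "label": label,
        "confidence": round(share, 2),
        # Escalate anything non-benign, and any benign call that is not clear-cut.
        "escalate_to_analyst": label != "benign" or share < escalate_below,
    }

# Hypothetical investigation outputs, for illustration only.
result = final_determination([("benign", 0.9), ("benign", 0.8), ("suspicious", 0.3)])
print(result)
```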

The Economic Reality: Why This Matters

Recent studies show that proper Security Copilot implementations reduced mean time to resolution by 30%, accelerating response times and minimizing the impact of security incidents. But that's just the beginning.

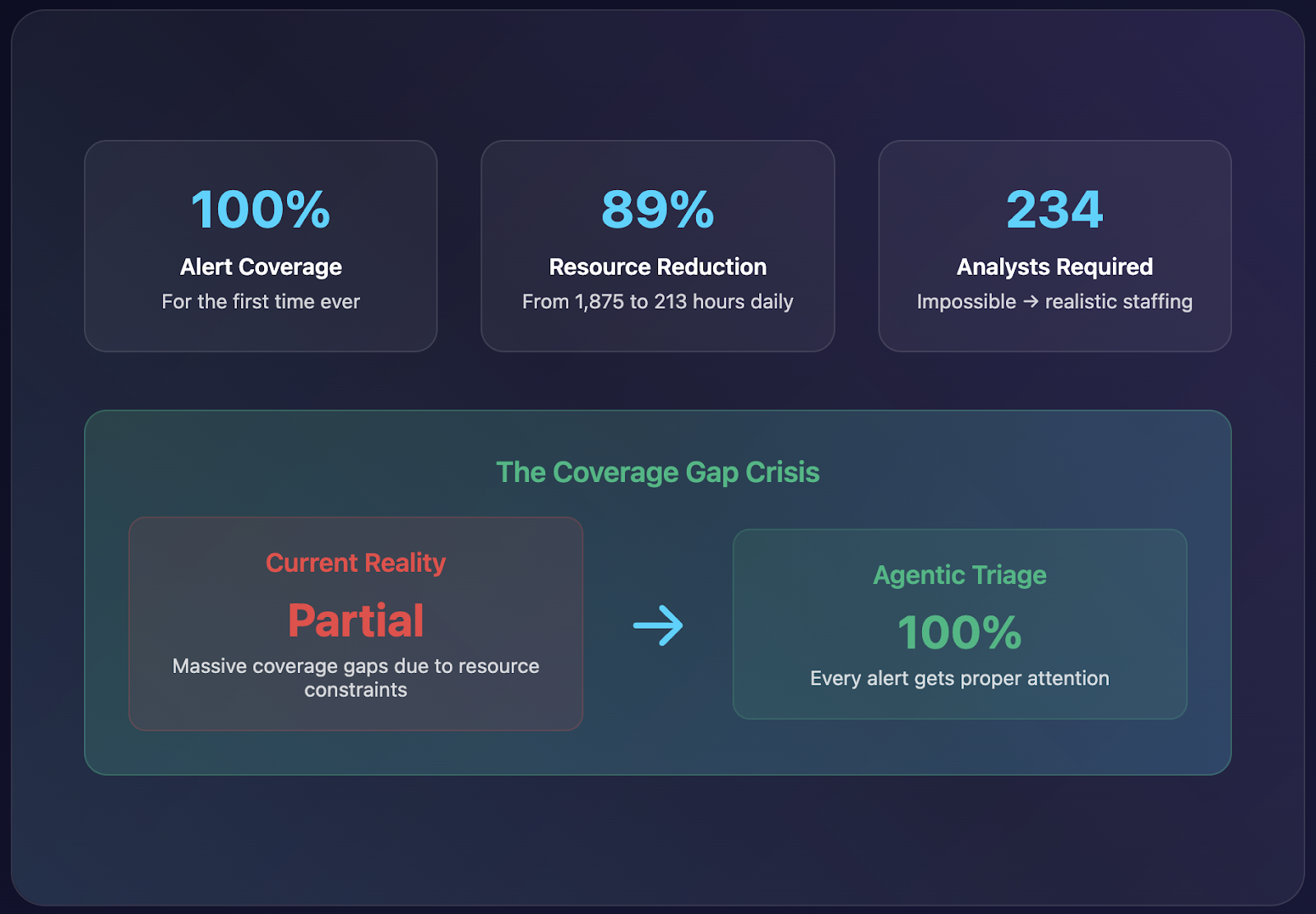

When you drastically reduce investigation time through intelligent automation, you're not just saving 30% of resolution time—you're enabling coverage that was previously impossible. The math reveals the true scope of the problem:

- Current reality: 4,500 alerts × 25 minutes = 1,875 analyst hours daily (requiring 234 full-time analysts per day—completely unrealistic)

- What actually happens: Most SOCs investigate only a fraction of alerts, leaving massive coverage gaps

- Agentic approach: 4,500 alerts × 2 minutes initial processing + intelligent investigation triggers on 500 alerts × 5 minutes + 50 escalated alerts × 25 minutes = 12,750 minutes, or about 212.5 hours daily (achievable with realistic staffing)

The key difference isn't just efficiency—it's enabling 100% alert coverage for the first time. Rather than leaving thousands of alerts uninvestigated due to resource constraints, intelligent automation ensures every alert gets proper attention while focusing human expertise on genuinely complex cases that warrant deep analysis.
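The workload arithmetic can be checked directly from the alert volumes and per-alert minutes quoted above. The 8-hour shift used to convert hours into analysts is an assumption made for this sketch.

```python
MINUTES_PER_HOUR = 60
SHIFT_HOURS = 8  # assumption: one analyst works an 8-hour shift per day

def daily_hours(tiers):
    """tiers: list of (alert_count, minutes_per_alert) pairs."""
    return sum(count * minutes for count, minutes in tiers) / MINUTES_PER_HOUR

# Every alert investigated manually at 25 minutes each.
manual_hours = daily_hours([(4500, 25)])
# Tiered: quick pass on all alerts, deeper look on 500, full investigation on 50.
tiered_hours = daily_hours([(4500, 2), (500, 5), (50, 25)])

print(manual_hours, manual_hours / SHIFT_HOURS)
print(tiered_hours, tiered_hours / SHIFT_HOURS)
```

With these inputs the manual case comes to 1,875 hours (about 234 shifts) and the tiered case to 212.5 hours (about 27 shifts), and only the 50 escalated alerts, roughly 21 hours, need full human investigation time.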

The Human Element: What Changes

By offloading repetitive triage and initial investigations, and especially by removing the flood of benign alerts from the human analyst queue, Agentic AI frees analysts to focus on high-value work like complex investigations and threat hunting. This reduces burnout and improves team retention, a critical factor in a competitive market with a persistent skills shortage.

There are only so many hours in the day, and with noise curtailed, you can focus on what matters most:

- Complex Investigations: Investigation of novel threats that cannot be fully remediated using Agentic AI

- Incident Response: Utilizing human cycles on the most impactful problems on your network

- Threat Hunting: Proactive investigation based on agent insights

- Agent Tuning: Improving fact generation and classification logic

- Response Orchestration: Coordinating complex remediation efforts

Implementation Reality Check

It is a monumental effort to ensure that all of the pieces needed for Agentic AI to work are present and working correctly. You need:

- Mature Detection Engineering: Standardized outputs with consistent enrichment—exactly what foundational detection engineering processes coupled with agentic detection engineering deliver automatically

- Alert Processing Pipeline: Data science capabilities for similarity analysis, pattern recognition, and behavioral modeling

- LLM Engineering: Prompt design, structured input/output, model fine-tuning

- Integration Architecture: API connectivity, data pipelines, workflow automation

- Feedback Loops: Continuous learning from analyst decisions and new threat patterns

But here's the thing: if you already use Anvilogic or have implemented agentic detection creation, you've got the foundational layer. Industry analysis suggests that near-complete automated triage is becoming achievable with today's technology, provided the workflow engine can handle hundreds of actions at a time and has the processing power for high-confidence analysis.

The detection outputs provide the structured, enriched inputs that your alert processing pipeline can work with. Without that foundation, even the most sophisticated data science pipeline will struggle with inconsistent, poorly documented detection outputs.

The Foundation Gap in Triage Copilots

Most organizations are implementing triage copilots without the proper foundational infrastructure. These copilots can be powerful tools when built on structured, fact-rich data, but they struggle when working with inconsistent detection outputs and poorly normalized alert schemas. What separates effective copilots from simple chatbots is the quality of data they process.

The difference becomes clear in their outputs:

- Foundation-lacking copilots: "This alert mentions SSH activity. Here's some general information about SSH risks..."

- Well-founded agents: "This alert is benign because prevalence analysis shows normal usage (fact X), user is in authorized group (fact Y), and activity matches established baseline (fact Z). Automatically closed. Next."

- Well-founded agents with escalation: "This alert is potentially malicious because process execution is anomalous (fact X), occurred outside business hours (fact Y), and destination IP has threat intelligence matches (fact Z). Recommend analyst review. Next."

When your triage system can process structured facts and deliver confident determinations with clear reasoning—whether that's automatic closure or intelligent escalation—you've moved beyond basic assistance into true analytical augmentation for cybersecurity.

Looking Forward: The Inevitable Evolution

Agentic AI is transforming SOCs by autonomously handling the majority of alert triage and initial investigations, dramatically reducing analyst burnout while improving detection speed and accuracy. This isn't a future vision—it's happening now for organizations with the right foundation.

The organizations that will thrive are those building end-to-end agentic systems: from detection creation that compresses the scientific method into minutes, to triage automation that handles routine investigations while empowering analysts to focus on complex threats. When your detection agent produces standardized, fact-rich alerts that feed directly into your triage agent's classification engine, you've created a closed-loop system that scales exponentially.

The triage revolution isn't about better copilots—it's about empowering analysts with intelligent systems that handle the routine work, escalate the complex cases with rich context, and free human expertise for the strategic thinking that truly requires creativity and judgment.

Ready to empower your analysts instead of overwhelming them? If you've already implemented agentic detection engineering, you're most of the way there. If you haven't, start with automating the detection creation process first—because without standardized, fact-rich inputs, even the best triage agent is just another copilot struggling with inconsistent data.
